The Essential A/B Testing Tool Guide

Are you using an A/B testing tool to improve your website conversion rates and sales? If so, that’s a great start, but you are probably getting smaller conversion lifts than are possible.

Unfortunately, many A/B testing tools don’t teach you how to get better results from your A/B tests, and their onboarding is often lacking.

To help you improve the results you get from your A/B testing tool, I have created a high-impact guide that outlines the key steps you need to focus on. It’s perfect for beginners, but also for people who have been A/B testing for a while.

Pretesting: Planning your A/B tests

Did you know that most of the things that affect your chances of great A/B testing results actually happen BEFORE you even log in to your A/B testing tool? Yes, that’s right: I would go as far as to say that over 75% of A/B testing success comes from these 3 factors:

1: Create high-impact test ideas. Don’t just pick random things to A/B test, or only test what you or your boss thinks is best. To increase your chance of winning test results, use these 6 main sources of higher-impact test ideas:

- Web analytics tool insights. These will reveal the highest-potential pages to A/B test. For a great place to start, check out these top Google Analytics reports for gaining insights.

- Website usability feedback. Use tools like UserFeel.com to gain essential visitor feedback and find the most problematic pages to A/B test.

- Visitor survey feedback. Use tools like Hotjar.com to get more visitor feedback, including full surveys and page-specific single question polls.

- Expert A/B test ideas. To get the highest-quality A/B test ideas, you should also get recommendations from CRO experts like me and others.

- Competitor website reviews. Regularly check what your competitor websites are improving or launching. But don’t just copy them – look to improve what they are doing.

- Previous A/B test results. Don’t just file away your A/B test results and move on to the next idea – you need to learn from them to create better follow-up tests, particularly if they have failed.

You also need to create a hypothesis for each A/B test idea. It states why you want to A/B test that particular element or page and why you think the change will have a positive impact; adding supporting insights from web analytics and visitor feedback is important too.

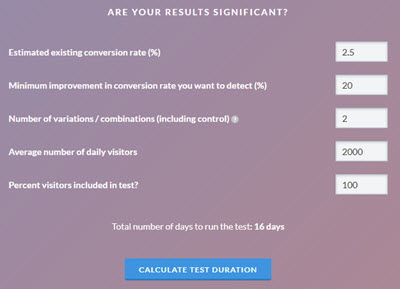

2: Check that you have enough traffic to the page you plan to A/B test. If you don’t have adequate traffic (and sales), you risk wasting time testing: you won’t even get a significant result, let alone a good one! To understand if you have enough traffic, you can use calculators like this to see how many days are needed to run a test. Needing any more than 30 days to get a result is too long.

If you don’t have enough traffic, consider using a metric that converts more often, like click-through rate, or increasing the ‘minimum improvement in conversion rate you want to detect’. For more details, check out these great alternative ways to A/B test and improve your website.
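Online calculators differ in the details, but many use a standard two-proportion sample-size approximation along the lines of the sketch below. It assumes a two-sided test at 95% significance and 80% power (common defaults, not settings this guide prescribes), an even traffic split, and hypothetical function names. It shows how both levers above, a higher-baseline metric and a larger minimum improvement to detect, shorten the test.

```python
import math
from statistics import NormalDist

def sample_size_per_variation(baseline_rate: float, min_relative_lift: float,
                              alpha: float = 0.05, power: float = 0.8) -> int:
    """Visitors needed in EACH variation (two-sided two-proportion z-test approximation)."""
    p1 = baseline_rate
    p2 = baseline_rate * (1 + min_relative_lift)  # smallest improved rate we want to detect
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return math.ceil((z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2)

def days_needed(baseline_rate: float, min_relative_lift: float,
                daily_visitors: int, num_variations: int = 2) -> int:
    """Days to reach the sample size when daily traffic is split evenly across variations."""
    per_variation = sample_size_per_variation(baseline_rate, min_relative_lift)
    return math.ceil(per_variation * num_variations / daily_visitors)

# Hypothetical page with 2,000 visitors a day.
for baseline, lift in [(0.03, 0.10), (0.03, 0.20), (0.15, 0.10)]:
    print(f"baseline {baseline:.0%}, lift {lift:.0%}: {days_needed(baseline, lift, 2_000)} days")
```

With a 3% baseline, detecting a 10% relative improvement takes well over 30 days; raising the target to 20%, or switching to a metric with a 15% baseline such as click-through rate, brings it comfortably under 30.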

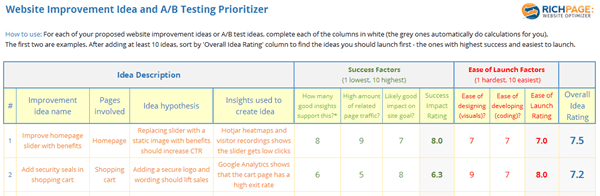

3: Prioritize your tests and try easier, high impact tests. It’s essential you prioritize your A/B test ideas to ensure you get the best impact on your conversion rates. This prioritization should be based on success factors like the number of good insights supporting the idea, and ease of launch factors like how easy it is to code the idea. To help you with this important step you can download my website improvement and A/B test idea prioritizer in my free conversion toolbox (see below for the example).

Don’t just pick the tests with the highest impact, because they may be hard to test (like your checkout pages). It’s key to focus on ideas that are quick and easy to implement yet still likely to have a high impact, for example test ideas for your headlines or images (known as low-hanging fruit).
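The prioritizer mentioned above is a downloadable spreadsheet; purely as an illustration, here is a hypothetical scoring scheme that combines success factors (supporting insights, expected impact) with ease of launch. The 1-5 scales, the weighting, and the example ideas are all assumptions, not the contents of that tool.

```python
from dataclasses import dataclass

@dataclass
class TestIdea:
    name: str
    supporting_insights: int  # count of insights backing the idea (analytics, usability, surveys...)
    expected_impact: int      # 1 (low) to 5 (high), a judgment call
    ease: int                 # 1 (hard to code and launch) to 5 (very easy)

def priority_score(idea: TestIdea) -> int:
    """Hypothetical score: success factors multiplied by ease of launch."""
    success = idea.supporting_insights + idea.expected_impact
    return success * idea.ease

ideas = [
    TestIdea("Checkout page redesign", supporting_insights=3, expected_impact=5, ease=1),
    TestIdea("Homepage headline", supporting_insights=2, expected_impact=3, ease=5),
    TestIdea("Product image size", supporting_insights=1, expected_impact=2, ease=4),
]

ranked = sorted(ideas, key=priority_score, reverse=True)
for idea in ranked:
    print(f"{priority_score(idea):>3}  {idea.name}")
```

Multiplying by ease, rather than adding it, is what keeps a high-impact but hard idea such as a checkout redesign from topping the list, in line with the advice to favor low-hanging fruit.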

Prioritizing your A/B tests like this will help you get good results quickly, which is vital for gaining further buy-in and budget for A/B testing in the future. If you pick something hard or risky to implement instead, it may not give great results and could derail your future efforts.

Creating A/B tests in testing tools

Now that you know how to get better results even before using your tool, let’s discuss the most important things to remember while creating your tests.

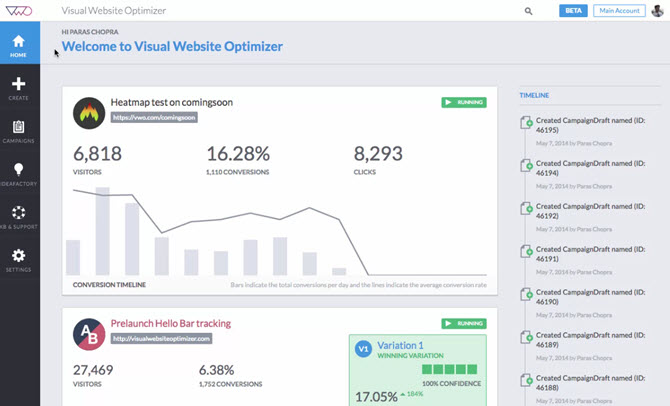

Please note this presumes you already have an A/B testing tool. I recommend using VWO, which is better than the free but limited Google Optimize tool. To help you understand the differences between A/B testing tools like these, check out this A/B testing tool comparison guide I created.

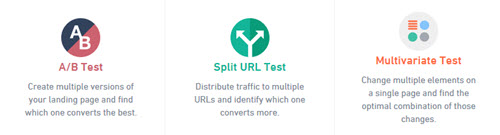

4: Choose your type of website test. When you are creating a test, one of the first things you will be asked is what type of test you want to run. Here are the three main types:

- A/B tests are the most common and usually the best option, and are great for testing variations of a single element like a headline or button. It is best to test between 2-5 different variations (called A/B/n tests). However, if you are testing many things at once on a page, you will find it hard to understand which elements are contributing most to conversion rate success.

- Multivariate tests (MVT) allow you to test many elements on a page at once, but require considerably more traffic than an A/B test, because they have to test many more combinations of variations. It’s quite rare to have enough traffic for this type of test, and experts often prefer A/B testing.

- Split page tests are simple page redirect tests and are very similar to A/B tests. They are best used when the only way you can A/B test a page is by creating and showing a different page (this may happen if your website platform makes changes particularly hard).
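To see why MVT needs so much more traffic, it helps to count variations. A full-factorial MVT turns every combination of element versions into its own variation, so traffic is split far more thinly. This sketch assumes a full-factorial design and an even traffic split; the element counts and traffic figures are hypothetical.

```python
import math

def mvt_combinations(versions_per_element: list[int]) -> int:
    """Full-factorial MVT: each combination of element versions is one variation."""
    return math.prod(versions_per_element)

def visitors_per_variation(daily_visitors: int, days: int, num_variations: int) -> int:
    """Visitors each variation receives when traffic is split evenly."""
    return daily_visitors * days // num_variations

# Hypothetical page: 3 headlines, 3 hero images, 2 button colors (originals included).
combos = mvt_combinations([3, 3, 2])
print("MVT variations:", combos)
print("A/B/n (3 headlines):", visitors_per_variation(2_000, 14, 3), "visitors each")
print(f"MVT ({combos} combinations):", visitors_per_variation(2_000, 14, combos), "visitors each")
```

Over the same two weeks, each MVT variation receives about a sixth of the visitors that each variation in a three-way A/B/n test gets.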

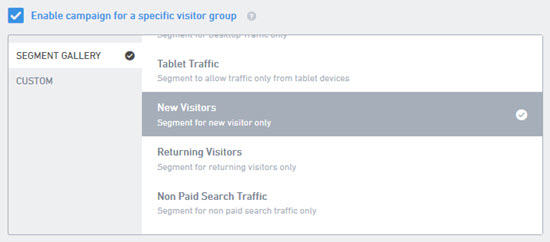

5: Understand targeting in A/B tests to improve conversion rates. You will also see an option to create a personalization test or add test targeting in many A/B testing tools. This isn’t just about showing different content to visitors in different countries or cities – it’s all about using the tool to show more relevant content to different groups of your visitors, like new visitors or repeat visitors (e.g. new user guides, repeat visitor discounts and affinity content). It’s also one of the best ways to push your conversion rates and sales much higher using your A/B testing tool.

You can set up simple visitor groups to target in A/B testing tools fairly easily, using visitor attributes like whether they are a new visitor, or whether they have seen a particular page or product (known as content affinity targeting). Go ahead and think of some high-impact visitor groups on your website and try targeting specific A/B test content to them.
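In most A/B testing tools you define these visitor groups through targeting settings rather than code, but the underlying rules amount to something like the sketch below. The `Visitor` fields, the category name, and the content labels are hypothetical; the sketch simply checks content affinity first, then new versus repeat visitors.

```python
from dataclasses import dataclass, field

@dataclass
class Visitor:
    visit_count: int                                          # 1 on the first visit
    viewed_categories: set[str] = field(default_factory=set)  # pages/products seen before

def choose_content(visitor: Visitor) -> str:
    """Return the hypothetical content variant to show this visitor."""
    # Content affinity: the visitor has already shown interest in a specific category.
    if "running-shoes" in visitor.viewed_categories:
        return "running-shoes-promo"
    # New visitors get onboarding help; repeat visitors get a returning-visitor offer.
    if visitor.visit_count <= 1:
        return "new-visitor-guide"
    return "repeat-visitor-discount"

print(choose_content(Visitor(visit_count=1)))
print(choose_content(Visitor(visit_count=4)))
print(choose_content(Visitor(visit_count=2, viewed_categories={"running-shoes"})))
```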

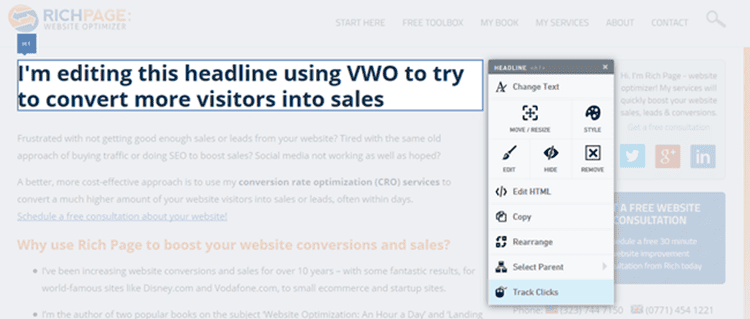

6: Create great variations for the page or elements you want to test. If you are changing simple things like text or imagery, or moving elements, you can create your test variation with the visual editor in your A/B testing tool. You will need to use the code editor for more complex changes, though. If you have the resources, always seek help from web designers and the marketing team to create better, more engaging visual designs for your test variations.

Make sure the differences in your A/B test variations are easily noticeable; otherwise visitors won’t notice them and won’t convert any better than with your current version. Also, don’t create too many variations (more than 4), because the more you create, the longer it takes the tool to reach a test result (particularly if you are running an MVT).
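The cost of extra variations is simple arithmetic: traffic is divided among all of them, control included, so each one reaches its required sample more slowly. This sketch assumes an even split and a fixed, hypothetical sample size per variation. It ignores the statistical corrections some tools apply when comparing several variations at once, which would lengthen the test further.

```python
import math

def days_to_finish(visitors_needed_per_variation: int, daily_visitors: int,
                   num_variations: int) -> int:
    """Days until every variation (control included) reaches its sample size."""
    total_needed = visitors_needed_per_variation * num_variations
    return math.ceil(total_needed / daily_visitors)

# Hypothetical: 10,000 visitors needed per variation, 2,000 visitors a day.
for k in range(2, 6):
    print(f"{k} variations: {days_to_finish(10_000, 2_000, k)} days")
```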

7: Understand and set up appropriate goals to measure for each A/B test. Next, it’s key that you set up relevant goals to measure the success of each A/B test. This depends on what you are testing, and will often involve more than one goal. If you are testing improvements to your checkout pages, the most important goal will be order completion. If you are testing your homepage, you will want to set up goals like click-through rate, plus your main site goal, whatever that is (for example, generating sign-ups).

The other most important thing here is to always try to set up revenue as a goal for each test. This helps you prove the test’s impact on online revenue, not just on conversion rates (your boss and senior executives will care more about revenue!). It is also essential for showing the ROI of the tool, which is vital if you want to gain more budget for future A/B testing. Revenue can be set up fairly easily as a goal in VWO and Optimizely. Are you doing this for every test?
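Tracking revenue alongside conversions matters because the two can disagree. In this hypothetical example (all figures invented for illustration), Variation B wins on conversion rate but loses on revenue per visitor, for instance because it attracts smaller orders.

```python
from dataclasses import dataclass

@dataclass
class VariationResult:
    name: str
    visitors: int
    orders: int
    revenue: float

    @property
    def conversion_rate(self) -> float:
        return self.orders / self.visitors

    @property
    def revenue_per_visitor(self) -> float:
        return self.revenue / self.visitors

# Hypothetical results: B converts more visitors but at a lower average order value.
control = VariationResult("Control", visitors=10_000, orders=300, revenue=24_000.0)
variant = VariationResult("Variation B", visitors=10_000, orders=315, revenue=23_310.0)

for v in (control, variant):
    print(f"{v.name}: {v.conversion_rate:.2%} conversion, "
          f"${v.revenue_per_visitor:.2f} revenue per visitor")
```

Judged on conversion rate alone, Variation B looks like the winner; with revenue as a goal, you can see it would actually cost money.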

8: Add notes in the tool to explain your A/B hypothesis and insight. Many overlook this part of creating tests in the tool – use the ‘notes’ feature to document and share your reasons for each test (in particular the test hypothesis and insights supporting it). This will also help when analyzing test results in the future. In this notes section of the tool you should also add observations about the test result, and possible ideas for future tests (as we will discuss in step #14, the final step).

9: Preview the A/B test you want to launch and perform QA. Next you need to preview the A/B test on your website to make sure everything looks fine for each of the test variations, and nothing is broken. This is known as quality assurance (QA). You should ideally perform this QA on a development version of your website and not your live website, because you don’t want your site potentially breaking while people are using it. Don’t forget to check your test in multiple browsers too, as browsers can affect how your variations look; most tools have built-in features to help you do this.

And if this is your first A/B test on your website, the tool will remind you at this point to add its A/B testing code snippet to your site, and will check that it is present.

10: Launch the A/B test. The last step of creating a test in an A/B testing tool will be the exciting part – hitting the launch button. As soon as you have done this, you should do another round of QA to double check the test variations are working as expected, and also that results are beginning to show up in your testing tool.

Analyzing A/B test results in testing tools

Now that your test is up and running, it’s important to know how to analyze the test results. If you don’t do it correctly, you risk launching a ‘winning’ version that isn’t actually the best-performing one.

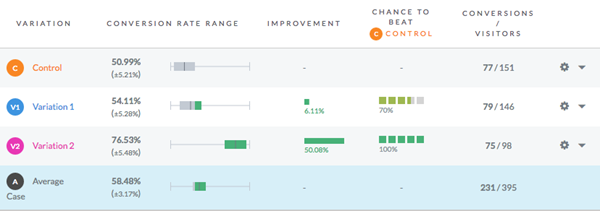

11: Understand what confidence means and respect it, but don’t let it cause paralysis. A/B testing tools continuously analyze how each variation is performing and declare winners and losers using statistical significance models. This significance result (called ‘chance to beat’ in VWO) is shown in your reports as a percentage for each variation. In layman’s terms, the higher the confidence percentage, the less likely it is that the difference you are seeing is just random chance, and so the more likely it is that the same result would occur if you ran this test again.

I won’t go into too much detail about how it is calculated, as this great guide explains it. Ideally you need at least 85% confidence to be sure the tool has found a statistically significant winning version, but don’t let that stop you from choosing a winner if you see a variation winning with an amazing lift, but with only 81% confidence.
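The exact model behind VWO’s ‘chance to beat’ is not covered here, so the sketch below uses a common Bayesian stand-in instead: give each variation’s conversion rate a Beta posterior (starting from a uniform prior) and estimate by simulation how often B’s sampled rate beats A’s. It illustrates the idea only; it is not VWO’s exact calculation, and the conversion numbers are hypothetical.

```python
import random

def chance_to_beat(conv_a: int, visitors_a: int, conv_b: int, visitors_b: int,
                   samples: int = 100_000, seed: int = 42) -> float:
    """Estimate P(rate_B > rate_A) with Beta(1, 1) priors and Monte Carlo sampling."""
    rng = random.Random(seed)
    wins = 0
    for _ in range(samples):
        # Posterior for each rate: Beta(1 + conversions, 1 + non-conversions).
        rate_a = rng.betavariate(1 + conv_a, 1 + visitors_a - conv_a)
        rate_b = rng.betavariate(1 + conv_b, 1 + visitors_b - conv_b)
        if rate_b > rate_a:
            wins += 1
    return wins / samples

# Hypothetical results: control 300/10,000 vs variation 345/10,000.
print(f"Chance B beats A: {chance_to_beat(300, 10_000, 345, 10_000):.1%}")
```

For these numbers the estimate comes out at roughly 96%, above the 85% guideline; two variations with identical results hover around 50%.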

12: Gain at least a week’s worth of results before declaring a winner. Declaring a winner too early is a very common mistake among A/B testing tool users, with negative consequences. Even if the tool shows high confidence for a winner within a few days, you need at least 7 days’ worth of results to account for day-of-week differences in traffic, and to let fluctuations in the winning variation level out (often you will see one variation start off winning, then dip while another version starts winning, as you can see in the graph below).

Never declare a winner sooner, or you risk launching a version that isn’t actually the best improvement on conversion rates, or even one that lowers conversion rates in the following weeks and reduces sales or revenue.
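The rules of thumb from steps 11 and 12 can be combined into a simple check before you call a test. This is a hypothetical helper, not a feature of any particular tool; it uses this guide’s 7-day minimum and 85% confidence guideline as defaults. The threshold is a parameter because, as step 11 notes, a strong lift at slightly lower confidence can still be worth acting on.

```python
from datetime import date

def ready_to_call(start: date, today: date, confidence: float,
                  min_days: int = 7, min_confidence: float = 0.85) -> bool:
    """Hypothetical check before declaring a winner: enough days AND enough confidence."""
    days_run = (today - start).days
    # A full week covers every weekday, so day-of-week traffic swings are included.
    return days_run >= min_days and confidence >= min_confidence

start = date(2024, 3, 4)  # a Monday
print(ready_to_call(start, date(2024, 3, 7), confidence=0.97))   # only 3 days of data
print(ready_to_call(start, date(2024, 3, 11), confidence=0.90))  # 7 days and above 85%
```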

13: Know how to interpret conversion rate results. This is another key thing to understand when analyzing test results. You may be expecting to see 50% or 100% increases in conversion rates for success, but this is not likely. However, even much smaller increases can be considered successful, particularly when you translate the lift into additional revenue. Even a seemingly small 2% uplift can have a big impact on revenue! Ideally you should always track revenue uplift too, as mentioned earlier.

The average conversion rate increase for an A/B test is about 8%, with more experienced A/B testers and CRO experts often able to get this much higher for some results. Anything over a 2% increase is considered reasonable, above 5% is good, and over 20% is very good. Getting over 50% is excellent, but it’s quite hard to get increases like that.

And remember, it’s not only about the percentage conversion rate lift: you need to check the actual absolute increase in conversion rate too. For example, a 50% increase isn’t as good as it sounds if it’s the difference between a 0.2% and a 0.3% conversion rate.
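The difference between relative and absolute lift, and why a small uplift can still matter, is easy to check with a few lines of arithmetic. The traffic, conversion rates and average order value below are hypothetical, and the revenue estimate assumes the average order value stays the same.

```python
def relative_lift(baseline_rate: float, new_rate: float) -> float:
    """Lift as a fraction of the baseline (0.5 means +50%)."""
    return (new_rate - baseline_rate) / baseline_rate

def absolute_lift_points(baseline_rate: float, new_rate: float) -> float:
    """Change in conversion rate, in percentage points."""
    return (new_rate - baseline_rate) * 100

def extra_annual_revenue(annual_visitors: int, baseline_rate: float,
                         new_rate: float, avg_order_value: float) -> float:
    """Additional revenue from the extra conversions, assuming order value is unchanged."""
    return annual_visitors * (new_rate - baseline_rate) * avg_order_value

# The example above: 0.2% -> 0.3% is a 50% relative lift but only 0.1 percentage points.
print(f"{relative_lift(0.002, 0.003):.0%} relative, "
      f"{absolute_lift_points(0.002, 0.003):.1f} points absolute")

# A "small" 2% relative uplift on a 3% conversion rate (3% -> 3.06%),
# with 1,000,000 visitors a year and an $80 average order.
print(f"${extra_annual_revenue(1_000_000, 0.03, 0.0306, 80):,.0f} extra per year")
```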

After the A/B test has ended

Don’t forget to always do this last essential step after your A/B test has ended, as it feeds back into step 1 and helps improve your future A/B test results.

14: Learn from your A/B test results to create better follow-up test ideas. Don’t just test something and then move on to the next test idea; to gain higher conversion rates you need to learn from the results and run better follow-up tests. Quite often, tests won’t generate the results you expect or won’t produce a winner (a study from VWO even revealed that only 1 out of 7 tests wins).

Therefore you should find possible reasons why the test didn’t work as planned, and then create a follow-up test using different test elements or variations. Brainstorming the results with a wider audience including marketing and design teams will help gain great ideas and insights, as will getting visitor feedback relating to the page you ran the A/B test on. I created a detailed article about how to learn more from A/B tests that don’t win, so read that too.

Wrapping up

So there we have it: the essential guide to A/B testing tool success. If you found this useful, please share it with others, particularly anyone who helps run A/B tests (web analysts, designers, developers, online marketers, etc.). This A/B testing guide is another essential read too.