The Best Google Optimize Alternatives From A CRO Expert


Google Optimize was the most used A/B testing tool. But Google have sunset it and it can no longer be used as of Sept 30th 2023. Many businesses are therefore asking which is the best Google Optimize replacement to switch to.

There are so many A/B testing tools to choose from, but many are either very expensive or not as good as Google Optimize. And unfortunately there are no good free options.

I’m here to help you decide which A/B testing tool to use, as I’ve got 15 years of experience in A/B testing.

That is why I have created this guide comparing the two leading Google Optimize alternatives, VWO and Convert.

It provides you with a side-by-side comparison of each tool, and a closer look at each one’s pricing and features.

And as I work so closely with both of these tools, you will also get exclusive deals for upgraded and longer free trials.

Comparing Google Optimize versus VWO and Convert

This comparison table will help you understand the best alternative for Google Optimize to consider.

| Criteria | Google Optimize | VWO | Convert |
| --- | --- | --- | --- |
| Cost rating | 10/10 Free but this tool has been sunset and can no longer be used. | 8/10 Free plan with no targeting, and full features from $424 per month. Starts with 50K users per month. | 9/10 Plans start at $349 per month for their basic plan, including 200K users per month. |
| Test setup experience and usability | 5/10 Good but only using Chrome plugin. Poor A/B test creation user experience. | 9/10 Great A/B test creation process, with very good user experience and onboarding. Simple to use visual editor and advanced code editor. | 8/10 Good, but the user experience is a bit basic. Very good visual editor and code editor. Onboarding could be improved. |
| Testing types available | 7/10 A/B tests, MVT and redirect tests, but no multi-page tests. | 8/10 A/B tests, split tests and multi-page are included in all plans, but MVT only in Growth plan and up. | 9/10 A/B tests, split tests, MVT, and multi-page are included in all plans. |
| Test targeting options | 6/10 Basic targeting. No ability to use Google Analytics segments (only in premium 360 version). | 6/10 Good targeting options, but full options and customized targeting only in their most expensive plan. | 9/10 The most advanced targeting options are included even in lowest level plan. |
| Success metrics | 7/10 Only 3 objectives can be used per test, with limited ways to customize them. | 9/10 Great – good selection of goals and metrics, including revenue. | 9/10 Great – good selection of goals and metrics, including revenue. |
| A/B test reporting | 6/10 Standard reporting, but in-depth analysis can be done in Google Analytics. | 8/10 Good reporting, but results segmentation only in pro plan and up. | 9/10 Very good real-time results reporting and segmentation on all plans. |
| Customer support | 1/10 No customer support offered, only guides and articles in their help center. | 7/10 Email support included in all plans, and phone and live chat in higher level plans. | 9/10 Phone, live chat and email support included in all plans. |
| Overall rating | 7/10 Good for beginners, but lacks advanced features and support options. | 8/10 An excellent tool with a good free plan, but plans with the best features are more costly than Convert. | 9/10 They offer the lowest cost plans, although their support and onboarding is not as good as VWO. |
| Free trial? | Has been sunset and can’t be used anymore | Get an upgraded 45-day free trial of VWO | Get a longer 30-day free trial of Convert |

Now let’s get more detailed, with an overview of each of these tools including pros and cons.

Google Optimize Review

To help you understand how Google Optimize compared to VWO and Convert, it’s worth looking at its notable pros and cons.

Google Optimize Pricing:

- Google Optimize: Has been sunset and can’t be used anymore

Google Optimize Pros:

- It was a good tool for free, and was ideal for businesses who only had basic A/B testing needs.

- They had an excellent integration with Google Analytics to help improve A/B testing reporting.

- It was easy to analyze reports in greater depth by using Google Analytics alongside it.

Google Optimize Cons:

- Can no longer be used, as it was sunset on Sept 30th 2023.

- Its usability was lacking and limited, particularly when creating A/B tests.

- Only 5 tests could be run at the same time.

- There was no ability to use Google Analytics segments.

- Only 3 success metrics (objectives) could be used.

- Multi-page testing was not included in the free version.

- Reporting was not real-time, with 6-8 hour delays in data coming through.

- No support was included. Help was only available through forums or paid 3rd party consultants or agencies.

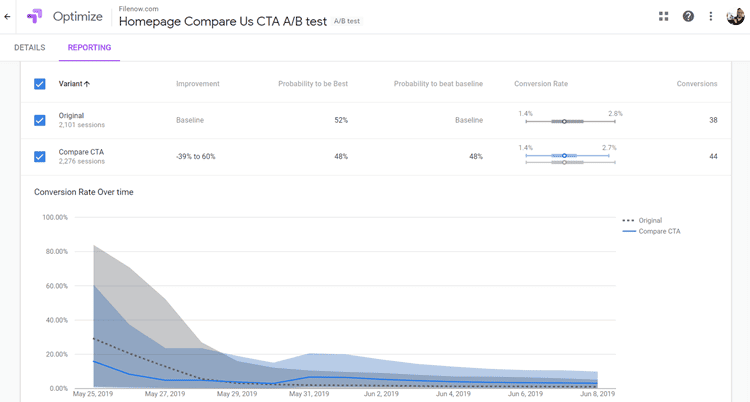

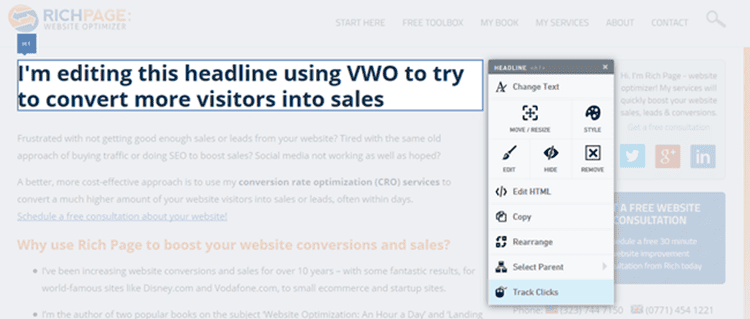

VWO Review

The first Google Optimize alternative for you to consider is VWO. They are one of the pioneering A/B testing tools, having launched in 2010. They initially started off as a low cost A/B testing tool, but have recently added many excellent features for advanced users.

They have also expanded their offerings to include different plans with additional CRO tools, like heat maps, session recordings and surveys. Those tools are very useful for doing conversion research to create better A/B testing ideas.

VWO Pricing:

They have 4 different plans for their VWO testing tool. Here is the new pricing for 50K users per month:

- Starter: Free but only has URL or device targeting

- Growth: $424 per month billed annually, with full segment targeting

- Pro: $975 per month billed annually, includes multi-page campaigns

- Enterprise: $1707 per month billed annually, with API and self hosting

These plan prices increase when you want more than 50K users per month. For example, the Growth plan increases to $652 per month if you want 100K users per month.

They also have these other plans for more advanced needs:

- VWO Full Stack – for doing mobile app and server side testing

- VWO Insights – includes CRO tools for session recordings, survey tools and form analysis

- VWO Personalize – for doing advanced personalization campaigns

- VWO Deploy – lets you deploy winning versions without needing development

- VWO Services – their agency handles all the A/B testing strategy, design and dev for you

Here are the pros and cons of the VWO Testing plan, which is the plan most of their clients choose.

VWO Pros:

- The free plan is great for replacing Google Optimize, and is ideal if you don’t need segment targeting.

- Intuitive user interface with great A/B test design wizard to help you get better results.

- Includes an A/B test duration estimator tool, which helps you see if you have enough traffic.

- Includes their very good ‘VWO Plan’ for managing and recording insights from A/B tests.

- Great integration between all the tools on their platform, including visitor recordings and surveys.

VWO Cons:

- Pricing is not publicly available, and has increased after moving away from the low cost market.

- While they now offer many CRO tools, the different plans and tools are confusing to understand.

- Advanced or customizable targeting is only available in their most expensive plan.

- Report segmentation (for example by device or traffic source) is only available in pro plan and up.

VWO Rating on G2: 4.3/5 (as of Mar 2024)

Upgraded free trial details: I have arranged a deal with VWO to give you a 45-day free trial instead of 30 days, and you also get 50% more users to test on during the free trial. There is also no credit card needed to try all the features.

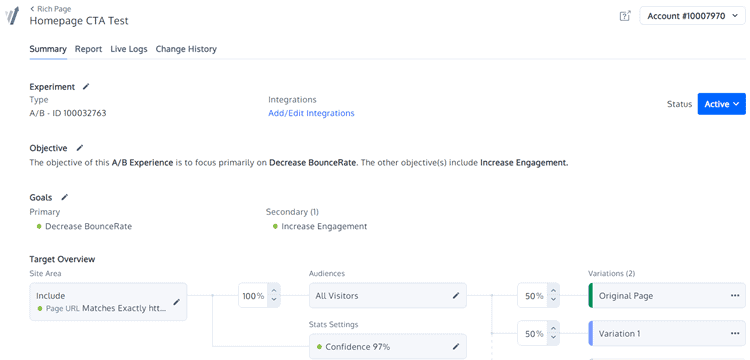

Convert Review

The other leading Google Optimize alternative to consider is Convert. They evolved from being a great low cost A/B testing tool to becoming one of the most popular A/B testing tools, with all the advanced features you need.

Convert Pricing:

They offer 4 plans, with additional features included in their higher cost plans. Here are the prices as of Mar 2024.

- Basic: $349 per month when paying annually (with 200K tested users)

- Growth: $699 per month when paying annually (with 500K tested users)

- Expert: $1,119 per month when paying annually (with 1 million tested users)

- Enterprise: You have to contact their sales team for customized pricing

Convert Pros:

- Great for A/B testing pros, including a very good code editor for CSS and JavaScript.

- Has the best value A/B testing tool plan for those who need over 200K users per month.

- The real-time reporting is very good and has excellent interactive graphs.

- It is one of the fastest loading tools for website user experience, with no flicker experienced.

- Their knowledge base and FAQ sections are very detailed, with good support too.

- For advanced users, you can switch between Frequentist and Bayesian stats engines.

- They have a helpful tool to import your A/B test history from Google Optimize.

Convert Cons:

- The user experience is a bit basic, and onboarding and help in the tool is limited.

- They increased the cost of their lowest price plan to $349, which may be unaffordable to some.

Convert Rating on G2: 4.8/5 (as of Mar 2024)

Upgraded free trial details: I got their free trial extended from 14 days to 30 days for you – you won’t find this elsewhere. And no credit card is required to sign up and use all the features.

4 Questions To Find The Best Google Optimize Alternative

So which is better, VWO or Convert? As you can see from my comparison earlier, they are both very good, but each has its own advantages. The simple answer is that it really depends on your budget and your exact needs.

To find out which tool is best for you, ask yourself these four important questions:

1: How much can you afford? Are you willing to spend at least $349 per month on an A/B testing tool, or will it be hard to get any budget for it?

2: How many users do you need per month? Check how many you need per A/B test using this calculator, then estimate how many users per month you need based on how many tests per month you want to do. Bear in mind that 100K users will often only be enough for 1 or 2 tests per month.

3: How advanced are your needs for targeting segments in your A/B tests, such as repeat customers or visitors from a particular geographic region?

4: Is cost your only consideration? How important to you is the best user experience, upgraded support, and better onboarding when using an A/B testing tool?
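If you want a rough feel for question 2 before using a calculator, here is a minimal Python sketch. It is not the calculator mentioned above. It uses the standard two-proportion sample size formula, and the function names, the 95% confidence level and the 80% power defaults are my own assumptions, so treat the output as a ballpark only.

```python
import math
from statistics import NormalDist


def users_per_variant(baseline_rate, relative_lift, alpha=0.05, power=0.8):
    """Approximate users needed in EACH variant of an A/B test.

    baseline_rate: current conversion rate, e.g. 0.03 for 3%
    relative_lift: smallest lift you want to detect, e.g. 0.20 for +20%
    alpha, power:  assumed defaults (95% confidence, 80% power)
    """
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_lift)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided test
    z_beta = NormalDist().inv_cdf(power)
    p_bar = (p1 + p2) / 2
    numerator = (
        z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
        + z_beta * math.sqrt(p1 * (1 - p1) + p2 * (1 - p2))
    ) ** 2
    return math.ceil(numerator / (p2 - p1) ** 2)


def monthly_users_needed(baseline_rate, relative_lift, tests_per_month, variants=2):
    """Users per month = users per variant x variants x tests per month."""
    per_variant = users_per_variant(baseline_rate, relative_lift)
    return per_variant * variants * tests_per_month


if __name__ == "__main__":
    # Hypothetical example: 3% baseline conversion rate, detect a 20% lift,
    # original plus one variation, 2 tests per month.
    print(users_per_variant(0.03, 0.20))
    print(monthly_users_needed(0.03, 0.20, tests_per_month=2))
```

With a low baseline conversion rate or a small expected lift, the number of users needed climbs quickly, which is why 100K users often only covers 1 or 2 tests per month.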

Then review each of these statements to see which Google Optimize replacement is best for your needs:

- If you can’t currently get any budget for an A/B testing tool, and are okay with only doing 1 test per month, use the VWO free plan (unless you want to do targeting, as it only includes targeting by URL or device).

- If you are doing 2 or more tests per month (between 50K and 100K users per month), the VWO free plan will not include enough traffic for you. If you can afford to pay $349 per month, then use the Convert Basic plan as it includes 200K users per month.

- If you are doing 5 or more tests per month, go with the Convert Growth plan of $699 per month which includes 500K users. The VWO cost for 500K users is much more expensive for similar features, at $2199 per month.

- If you need customized or advanced targeting for your A/B tests, VWO is much more expensive as you need their enterprise plan (starting at $1887 per month). Convert includes this even in their lowest cost plans.

- If cost isn’t your only consideration, and you want the best tool user experience, better onboarding and upgraded support, then go with a VWO plan, as VWO is currently better than Convert in these areas (although Convert’s user interface is good once you get used to its simplicity).

Neither VWO nor Convert requires credit card details to sign up, so I suggest you check both out and see which of their limitations has the biggest impact on you.
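To compare the plans on a like-for-like basis, it can help to work out the cost per 1,000 users. The sketch below is only an illustration using the prices quoted in this guide. The plan labels, the `PLANS` list and the function names are hypothetical, and the prices will change, so check each tool's current pricing before deciding.

```python
# Plan prices quoted in this guide: (label, users per month, price per month in USD).
# The labels are illustrative; the 500K VWO figure is the one quoted above.
PLANS = [
    ("VWO Growth (50K users)", 50_000, 424),
    ("VWO Growth (100K users)", 100_000, 652),
    ("VWO (500K users)", 500_000, 2199),
    ("Convert Basic", 200_000, 349),
    ("Convert Growth", 500_000, 699),
    ("Convert Expert", 1_000_000, 1119),
]


def cost_per_thousand(users, monthly_price):
    """Monthly price divided by the number of thousands of users included."""
    return monthly_price / (users / 1000)


def cheapest_plan(required_users, plans=PLANS):
    """Lowest-priced plan that includes at least `required_users`, or None."""
    eligible = [plan for plan in plans if plan[1] >= required_users]
    if not eligible:
        return None
    return min(eligible, key=lambda plan: plan[2])


if __name__ == "__main__":
    for label, users, price in PLANS:
        print(f"{label}: ${cost_per_thousand(users, price):.2f} per 1,000 users")
    print(cheapest_plan(150_000))
```

This only compares price against included users. It ignores feature differences such as targeting, support and onboarding, which the statements above cover.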

Other Google Optimize Alternatives Worth Considering

There are other less popular A/B testing tools you may want to consider as a replacement for Google Optimize. Here are some of the best ones, each with their own strengths.

- Shoplift – if you are using Shopify this is a great low cost tool and lets you create tests directly in Shopify.

- Conductrics – a very good but expensive tool that also offers a visitor survey tool for gaining feedback.

- AB Tasty – a good tool that also includes useful features for session recordings and heatmaps.

- Optimizely – one of the best tools, but is now enterprise market only after originally being a low cost tool.

- Adobe Target – a tool with very good features, but is very expensive and for enterprise market only.

- Kameleoon – another great enterprise tool that may be worth considering if you have a large budget.

Get a Free A/B Testing Tool Consultation

Still unsure about which A/B testing tool is right for you instead of Google Optimize? I can help review your tool needs with a free CRO and A/B testing consultation, and give you a free CRO teardown with recommendations to improve any page on your website.

I’m a CRO expert with over 15 years of experience. I work closely with Google Optimize alternatives like Convert and VWO, and have increased revenue for hundreds of online businesses with my A/B testing services.

Get a free consultation about which A/B testing tool to use and a CRO teardown