What to Do When Your A/B Test Doesn’t Win: The Essential Checklist


Unless you are an A/B testing and CRO expert, you may not realize that most of your A/B tests won’t produce a winning result. If you’ve tried A/B testing, you may have already experienced this disappointment yourself.

There is some good news, though. You can actually do something with these inconclusive A/B tests and turn them into better tests with a much greater chance of succeeding, increasing your website sales or leads without needing more traffic.

Instead of simply throwing away losing A/B tests and hoping to get luckier with your next one, you can take advantage of a fantastic learning opportunity that most online businesses don’t know about or don’t use well. But what should you do first? And what mistakes should you watch out for?

To help you succeed and maximize your learnings, I’ve put together a handy checklist for you. But before I reveal how to maximize your A/B test learnings and future results, let’s set the scene a bit…

First of all, just how many A/B tests fail to get a winning result?

A VWO study found that only 1 out of 7 A/B tests have winning results. That’s just 14%. Not exactly great, right?

Many online businesses are talking about disappointing A/B testing results too. Although some of its tests got very impressive results, Appsumo.com revealed that just 1 out of 8 of its tests drove significant change.

This lack of winning results often causes frustration, slows progress with A/B testing efforts, and limits further interest in (and budget for) CRO. The good news is that you can gain real value from failed tests, as I will now reveal in the learnings checklist.

The A/B Test Learnings Checklist

1: Did you create an insights-driven hypothesis for your A/B test?

A common reason for poor A/B test results is that the idea (the hypothesis for what was tested) was weak. This usually happens because businesses just guess at what to test, with no insights behind each idea. And without a good hypothesis, you will find it hard to learn anything if the test fails.

The best indicator of a strong hypothesis is that it was created using insights from conversion research: web analytics, visitor recordings, user testing, surveys, and expert reviews.

To create a better insight-driven hypothesis, you should use this format:

We noticed in [type of conversion research] that [problem name] on [page or element]. Improving this by [improvement detail] will likely result in [positive impact on metrics].

To show you what I mean, here is a real example:

We noticed in [Google Analytics] that [there was a high drop off] on [the product page]. Improving this by [increasing the prominence of the free shipping and returns] will likely result in [a decrease in exits and an increase in sales].
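If you keep a log of test ideas, it can help to store the template as structured fields rather than free text. The Python sketch below is a hypothetical illustration (the `Hypothesis` class and its field names are my own, not part of any testing tool): it fills in the template and refuses to build a hypothesis with an empty slot, so an idea with no research source behind it gets flagged.

```python
from dataclasses import dataclass


@dataclass
class Hypothesis:
    """Hypothetical record whose fields mirror the bracketed template slots."""
    research_type: str    # e.g. "Google Analytics"
    problem: str          # e.g. "there was a high drop off"
    location: str         # page or element
    improvement: str      # what you will change
    expected_impact: str  # expected effect on your metrics

    def render(self) -> str:
        # Reject any empty slot, so a pure guess (no research) can't slip through.
        for name, value in vars(self).items():
            if not value.strip():
                raise ValueError(f"hypothesis is missing: {name}")
        return (
            f"We noticed in {self.research_type} that {self.problem} "
            f"on {self.location}. Improving this by {self.improvement} "
            f"will likely result in {self.expected_impact}."
        )


h = Hypothesis(
    research_type="Google Analytics",
    problem="there was a high drop off",
    location="the product page",
    improvement="increasing the prominence of the free shipping and returns",
    expected_impact="a decrease in exits and an increase in sales",
)
print(h.render())
```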

So the first step is to check how good your A/B test hypothesis was. How many insights did you use when creating it? Or was it just a guess, or simply what your boss wanted? The more insights you used, the better.

If you think your hypothesis was poor, or you didn’t have one at all, you really need to create a better one using conversion research. Getting an expert CRO website review is a great place to start.

2: Did you wait at least 7 days before declaring a result, and did anything major change while it was running?

A simple yet common mistake with A/B testing is declaring a losing result too soon. This is particularly problematic if you have a lot of traffic and are keen to find a result quickly. Or worse still, the person running the test is biased, waits until their least favorite variation starts to lose, and then declares the test a loss.

To avoid this mistake, you need to wait at least 7 days before declaring a result. This allows fluctuations in variation results to level off and reduces the impact of day-to-day differences in traffic.

And any time you change anything major on your website while a test is running, you also need to wait at least an additional 7 days. A major change affecting a running test like this is known as test pollution, and the extra time lets your testing tool evaluate results with the new change in place.

If you find this was the case with your failed test, I suggest you re-run the test for a longer period, and try not to change anything major on your website while it runs.
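If you track test dates yourself, the 7-day rule is easy to check. Here is a minimal Python sketch, assuming you record the test start date and the date of the last major site change. The function names are hypothetical, and I’m reading “an additional 7 days” as 7 days counted from the date of the change.

```python
from datetime import date, timedelta
from typing import Optional

MIN_DAYS = 7  # minimum run time before declaring a result


def earliest_decision_date(start: date, last_major_change: Optional[date] = None) -> date:
    """Earliest date a result can be declared under the 7-day rule."""
    earliest = start + timedelta(days=MIN_DAYS)
    # Assumption: a major site change during the test means waiting
    # at least another 7 days, counted from the day of the change.
    if last_major_change is not None and last_major_change >= start:
        earliest = max(earliest, last_major_change + timedelta(days=MIN_DAYS))
    return earliest


def can_declare_result(today: date, start: date,
                       last_major_change: Optional[date] = None) -> bool:
    """True once the test has run long enough to declare a result."""
    return today >= earliest_decision_date(start, last_major_change)


start = date(2024, 3, 1)
print(earliest_decision_date(start))                    # 2024-03-08
print(earliest_decision_date(start, date(2024, 3, 5)))  # 2024-03-12
print(can_declare_result(date(2024, 3, 10), start, date(2024, 3, 5)))  # False
```

A 7-day minimum means every day of the week is included at least once, which is what smooths out the day-by-day traffic differences mentioned above.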

3: Were the differences between variations bold enough to be easily noticed by visitors?

Next, check the variations that were created for the test and see if they were really different enough for visitors to notice in the first place. If your variations were subtle, like small changes in images or wording, visitors often won’t notice them or act any differently, so you often won’t see a winning test result. I’ve seen hundreds of A/B tests created by businesses, and you would be surprised at how often this mistake occurs.

If you think this may have happened with your losing test, re-test it, but this time think outside the box and create at least one bolder variation. Involving other team members can help you brainstorm ideas; marketing experts are helpful here.

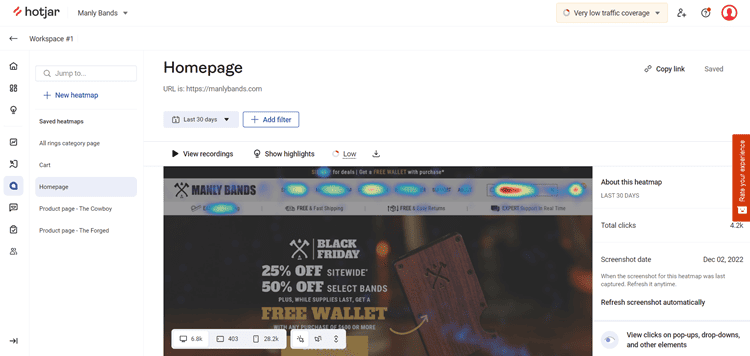

4: Did you review clickmaps and visitor recordings for insights about the page tested?

It’s essential to do visual analysis of how visitors interact with every page you want to improve and run an A/B test on. This helps you visually understand which elements are engaged with the most or least. This visual analysis is particularly important for pages and elements that relate to your failed A/B tests, because you can learn a lot from it. Did your visitors even notice the element you were testing?

The first type of visual analysis is clickmaps: heatmaps that show what your visitors are clicking on and how far they scroll down your pages. Even more important are session recordings, where you can watch visitors’ exact mouse movements and journeys through your website.

So if you haven’t done this visual analysis for the page relating to the inconclusive test, go ahead and do it now using a tool like Hotjar.com. You may realize that few people are interacting with the element you are testing, or that they are getting stuck or confused by something else on the page that you should run an A/B test on instead.

5: Have you performed user testing on the page being tested, including the new variation?

User testing is an essential piece of successful CRO. Getting feedback from your target audience is one of the best ways of generating ideas for improvements and A/B tests. User testing should also be performed in advance for your whole website, and before any major changes launch.

Therefore, I suggest you run user tests on the page relating to your losing A/B test to improve your learnings. In particular, I suggest using UserFeel.com to ask for feedback on each of the versions and elements you tested. Ask testers what they liked most and least, and what else they think is lacking or could be improved. This really is excellent for creating better follow-up test ideas.

6: Did you segment your test results by key visitor groups to find potential winners?

A simple way to look for learnings, and possibly uncover a winning test result, is to segment your A/B test results by key visitor groups. For example, you may find that your new-visitor or mobile segment actually generated a winning result, which you should push live for that segment. This segmenting is possible in any good A/B testing tool, like VWO or Convert.

To go one step further, you can actually analyze each of your test variations in Google Analytics to understand differences in user behavior for each test variation and look for more learnings. A web analyst is very helpful for this.
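Your testing tool will usually do this segmentation for you, but if you export raw visitor data, the calculation behind it is straightforward. This Python sketch assumes a hypothetical export where each visitor is a (segment, variation, converted) tuple; the sample data is made up purely for illustration.

```python
from collections import defaultdict


def conversion_by_segment(visits):
    """Return {(segment, variation): (visitors, conversion_rate)}.

    `visits` is an iterable of (segment, variation, converted) tuples,
    a hypothetical export format used for illustration.
    """
    totals = defaultdict(lambda: [0, 0])  # [visitors, conversions]
    for segment, variation, converted in visits:
        totals[(segment, variation)][0] += 1
        totals[(segment, variation)][1] += int(converted)
    return {key: (n, conv / n) for key, (n, conv) in totals.items()}


# Made-up sample data: variation B only looks better for mobile visitors.
visits = [
    ("mobile", "A", False), ("mobile", "A", True),
    ("mobile", "B", True), ("mobile", "B", True),
    ("desktop", "A", True), ("desktop", "B", False),
]
for (segment, variation), (n, rate) in sorted(conversion_by_segment(visits).items()):
    print(f"{segment:8} {variation}: {rate:.0%} of {n} visitors")
```

With real data, check how many visitors each segment has before acting on a segment-level difference. Small segments produce noisy conversion rates, which is why the visitor count is returned alongside each rate.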

7: How good was the copy used in the test? Was it action- or benefit-based?

You may have had a great idea for an A/B test, but how good and engaging was the copy (the text) in the test? Did it really captivate your visitors? This is worth spending time on, because headlines and calls to action often have a big impact on conversion rate. So if your losing test changed any text, really ask yourself how good the copy was. For better follow-up test wording, always try testing variations that mention benefits, solve common pain points, and use action-related wording.

Most people aren’t great at copywriting, so I suggest you get help from someone in your marketing department, a CRO expert like myself, or a copywriting expert like Joanna Wiebe.

8: Did you consider previous steps in the journey – what might need optimizing first?

Another key learning is to understand the whole visitor journey for your A/B test idea that didn’t win, rather than looking for learnings only on the page being tested.

This is important because if you haven’t optimized your top entry pages first, you will have limited success on pages further down the funnel, like your checkout. So go ahead and find the most common page visitors view before the page in your failed A/B test, and see if anything on it needs clarifying or improving that relates to the page you were testing. For example, if you were testing adding benefits in the checkout, did you also test the prominence of these benefits on previous pages?
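Your analytics tool may be able to report previous pages directly, but if you have raw session data, finding the most common previous page is a simple count. A minimal Python sketch, assuming each session is exported as an ordered list of page paths (the paths below are hypothetical):

```python
from collections import Counter


def previous_pages(sessions, target):
    """Count which page visitors viewed immediately before `target`."""
    counts = Counter()
    for pages in sessions:
        # Walk consecutive (previous, current) pairs within each session.
        for prev, current in zip(pages, pages[1:]):
            if current == target:
                counts[prev] += 1
    return counts


# Hypothetical sessions: each is the ordered list of pages one visitor viewed.
sessions = [
    ["/", "/product/shoes", "/cart", "/checkout"],
    ["/product/shoes", "/cart", "/checkout"],
    ["/", "/cart", "/checkout"],
    ["/sale", "/checkout"],
]
print(previous_pages(sessions, "/cart").most_common(1))
```

Here the most common page before `/cart` is the product page, so that is where you would look for anything that needs clarifying before re-testing the cart.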

You should take this a step further and also look at your most common traffic sources to see if they caused any issues on the page you were testing. For example, maybe the wording in your Google Ads didn’t match the wording on your entry pages very well.

9: Did you review the test result with a wider audience and brainstorm for ideas?

To increase learnings from your A/B tests, you should regularly get feedback on results from key people in related teams like marketing and user experience, at least once per quarter. Creative and design-oriented people are ideal for helping improve A/B testing ideas.

And this wider internal feedback is even more important when A/B tests don’t get a winning result. So I suggest you set up a meeting to review all your previous losing test results and brainstorm better ideas; I’m sure you will gain great insights from this wider team. Then, to ensure this review keeps happening, set up a regular quarterly A/B test results review meeting with these key people.

10: Could you move or increase the size of the tested element so it’s more prominent?

Another common reason for an inconclusive A/B test result is that the element being tested is not very prominent and often doesn’t get noticed by visitors. This is particularly true for elements in sidebars or very low down on pages, because these don’t get seen very often.

Therefore, to try and turn an A/B test with no result into a winning test, consider moving the element being tested to a more prominent location on the page (or another page that gets more traffic) and then re-run the test. This works particularly well with key elements like call-to-action buttons, benefits, risk reducers and key navigation links.

This last step of iterating and retesting the same page or element is essential, as it helps you determine whether it will ever have a good impact on your conversion rates. If you still don’t get a good follow-up test result, you should move on to test ideas for another page or element that will hopefully be a better conversion influencer.

Get a Free A/B Testing Consultation

To gain even better results from your A/B tests, I can help you with a free A/B testing consultation, and give you a free CRO teardown to improve any page on your website.

I’m a CRO expert with over 15 years of experience, and have increased sales for hundreds of online businesses with my A/B testing services.